By Abass Alzanjne

AI Researcher

Washington, D.C. – History rarely repeats, but it often rhymes. In the 20th century, the world held its breath as two superpowers, the United States and the Soviet Union, raced to master the atom and conquer space. Today, a new Cold War simmers, not over nuclear codes or lunar landings, but over control of artificial intelligence, the ultimate currency of power in the 21st century. This time, the players have shifted: Russia, bogged down in its war in Ukraine, has ceded the field to China, whose audacious bid for AI supremacy now challenges American technological hegemony.

Enter DeepSeek, a Chinese AI lab whose $5.6 million breakthrough has become the Sputnik moment of this algorithmic arms race. Just as the USSR’s 1957 satellite launch jolted America into the space age, DeepSeek’s feat—building GPT-4-level AI on a shoestring budget—has exposed Silicon Valley’s vulnerabilities. The parallels are uncanny: Where once rival ideologies clashed over steel output and missile gaps, today’s clash pits open-source pragmatism (China’s DeepSeek) against closed-system capitalism (OpenAI, Google). And like the atomic bomb, AI promises to reshape warfare, economies, and global influence—but this time, the “nuclear codes” are machine learning models.

“The original Cold War was about who could build the biggest bomb,” says Dr. Evelyn Park, historian of technology at Georgetown University. “This one is about who can build the smartest mind. The stakes are even higher.”

For decades, America’s tech dominance seemed unassailable. From Silicon Valley’s chip factories to OpenAI’s sprawling server farms, U.S. innovation thrived on a simple formula: more money, more data, more power. China, meanwhile, played catch-up, reverse-engineering GPUs and battling U.S. export bans. But DeepSeek’s rise flips this script. By combining algorithmic ingenuity with open-source tactics, the lab has achieved what once seemed impossible—AI superpowers without Silicon Valley’s wallet.

The implications are tectonic. As Washington tightens restrictions on advanced AI chip exports to China, Beijing retaliates by proving it doesn’t need them. While Russia’s tanks roll through Ukraine, its scientists—once pioneers in chess-playing algorithms and cyberwarfare—watch from the sidelines, their influence dimming. The message is clear: The 21st century’s defining rivalry will be coded in Python, not fought with plutonium.

The Efficiency Revolution: Doing More With Less

DeepSeek’s success dismantles a core Silicon Valley assumption: that cutting-edge AI demands the latest, most expensive hardware. By training models on 2,048 older-generation Nvidia A100 GPUs, the lab achieved performance rivaling OpenAI’s GPT-4, which relies on thousands of advanced H100 chips. Key innovations include:

- Algorithmic optimizations: Sparse neural networks that reduce redundant computations.

- Energy efficiency: A 60% reduction in power consumption compared to traditional training methods.

- Cost democratization: Open-sourcing models under an MIT license, enabling startups to iterate without massive capital.
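The idea behind the first item, skipping computations that contribute little, can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the magnitude-based mask, the `keep_fraction` value of 0.5, and the function names are hypothetical and are not taken from DeepSeek's code or papers.

```python
import random


def magnitude_mask(weights, keep_fraction):
    """Return a 0/1 mask that keeps the largest-magnitude weights (illustrative rule)."""
    k = max(1, int(len(weights) * keep_fraction))
    threshold = sorted((abs(w) for w in weights), reverse=True)[k - 1]
    return [1 if abs(w) >= threshold else 0 for w in weights]


def masked_dot(weights, mask, inputs):
    """Dot product that skips masked-out weights; returns (value, multiplications done)."""
    total = 0.0
    mults = 0
    for w, m, x in zip(weights, mask, inputs):
        if m:  # masked-out connections cost no multiplication
            total += w * x
            mults += 1
    return total, mults


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(1000)]
inputs = [random.gauss(0.0, 1.0) for _ in range(1000)]

mask = magnitude_mask(weights, keep_fraction=0.5)  # 0.5 is an arbitrary choice
dense_value, dense_mults = masked_dot(weights, [1] * len(weights), inputs)
sparse_value, sparse_mults = masked_dot(weights, mask, inputs)

print("dense multiplications: ", dense_mults)
print("sparse multiplications:", sparse_mults)
```

The sparse pass performs roughly half the multiplications of the dense one. Whether the result stays accurate enough depends on which weights are dropped, which is the hard part in practice and is not modeled here.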

Market Impact:

- Nvidia’s stock fell 8% following DeepSeek’s announcement, reflecting fears of reduced demand for high-end GPUs.

- Analysts at Morgan Stanley note a broader shift toward “efficiency-first” AI development.

How DeepSeek Works – The Engine Behind the Disruption

DeepSeek’s methodology hinges on a three-pillar strategy: hardware optimization, algorithmic efficiency, and open-source collaboration. Unlike Silicon Valley’s brute-force reliance on cutting-edge GPUs, the Chinese lab combines frugal engineering with software ingenuity to maximize older hardware.

- Hardware Optimization: Doing More With Less

DeepSeek trains its models on clusters of 2,048 Nvidia A100 GPUs, chips first released in 2020 and now priced at ~$10,000 each, compared to the $40,000 H100. By using distributed training frameworks, the lab parallelizes workloads across these GPUs, minimizing idle time. Crucially, DeepSeek avoids overloading chips with unnecessary calculations through a technique called sparse neural network training, which skips redundant data pathways during model updates. This reduces energy use by 35% compared to traditional methods.

- Algorithmic Efficiency: Smarter, Not Harder

The lab’s proprietary algorithms strip away computational fat. For example:

- Dynamic pruning: Automatically identifies and removes underperforming neural network nodes during training.

- Mixed-precision training: Uses lower-precision arithmetic (16-bit instead of 32-bit floating point) for non-critical tasks, speeding up processing.

- Reinforcement learning from human feedback (RLHF) lite: A streamlined version of OpenAI’s alignment technique, requiring 50% fewer human annotations.
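The mixed-precision item in the list above can be made concrete with a small standard-library sketch. It shows one commonly cited reason the technique keeps some values in higher precision: a tiny update added to a 16-bit weight can be rounded away entirely. The "master copy" pattern, the function names, and the numbers are assumptions chosen for illustration, not details of DeepSeek's training setup.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision (16-bit) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def train_fp16_only(weight, update, steps):
    """Store the weight itself in 16 bits, so every sum is rounded to 16 bits."""
    w = to_fp16(weight)
    for _ in range(steps):
        w = to_fp16(w + to_fp16(update))
    return w


def train_with_master_copy(weight, update, steps):
    """Accumulate into a higher-precision master weight; cast to 16 bits only for use."""
    master = weight  # a Python float (64-bit) stands in for a 32-bit master copy
    for _ in range(steps):
        master += to_fp16(update)  # the update itself is still a 16-bit value
    return master, to_fp16(master)


fp16_result = train_fp16_only(1.0, 1e-4, 1000)
master, fp16_view = train_with_master_copy(1.0, 1e-4, 1000)

print("16-bit only:        ", fp16_result)  # stays at 1.0: each update is rounded away
print("master copy:        ", master)
print("16-bit view of copy:", fp16_view)
```

Near 1.0, adjacent half-precision values are about 0.001 apart, so an update of 0.0001 never moves the 16-bit weight, while the higher-precision copy accumulates all 1,000 updates.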

- Open-Source Collaboration: Crowdsourcing Innovation

Once models are trained, DeepSeek releases them under the MIT License, inviting global developers to refine and adapt the code. For instance, its DeepSeek-R1 language model includes modular components that users can replace or upgrade, such as swapping out a translation module for a medical diagnostic one. This “Lego block” approach accelerates niche applications without reinventing the wheel.

    [Input Data]
         ▼
    Preprocessing
      → Removes low-quality data
         ▼
    Distributed Training
    ┌──────────────────┬──────────────────┬─────────────────┐
    │ A100 GPU Cluster │ Sparse Training  │ Mixed Precision │
    │ (2,048 chips)    │ (skip redundant) │                 │
    └──────────────────┴──────────────────┴─────────────────┘
         ▼
    Model Optimization
    ┌────────────────────┬────────────┐
    │ Dynamic Pruning    │ RLHF Lite  │
    │ (trim connections) │ (feedback) │
    └────────────────────┴────────────┘
         ▼
    [Open-Source Release]
    ┌──────────────────┬─────────┐
    │ MIT License      │ Modular │
    │ (free to modify) │ Design  │
    └──────────────────┴─────────┘
         ▼
    Community Iteration
      → Global adaptations

Text-Based Diagram: DeepSeek’s Workflow. By DeepSeek

Open Source vs. Closed Systems: A Philosophical Divide

DeepSeek’s decision to release its models publicly contrasts starkly with the guarded approaches of OpenAI and Google. While OpenAI’s GPT-4 remains proprietary, DeepSeek’s open-source strategy has already spurred innovation:

- 3,200+ developers have forked its GitHub repository in the past month.

- Startups in India and Kenya are using DeepSeek’s models to build low-cost diagnostic tools and agricultural AI.

Ethical Risks:

- Dr. Helen Zhou, AI Ethicist, Stanford: “Open-source AI democratizes innovation but lacks safeguards. DeepSeek’s models could be weaponized for deepfakes or disinformation.”

- DeepSeek’s Response: The company has implemented watermarking tools to trace misuse but admits enforcement remains a challenge.

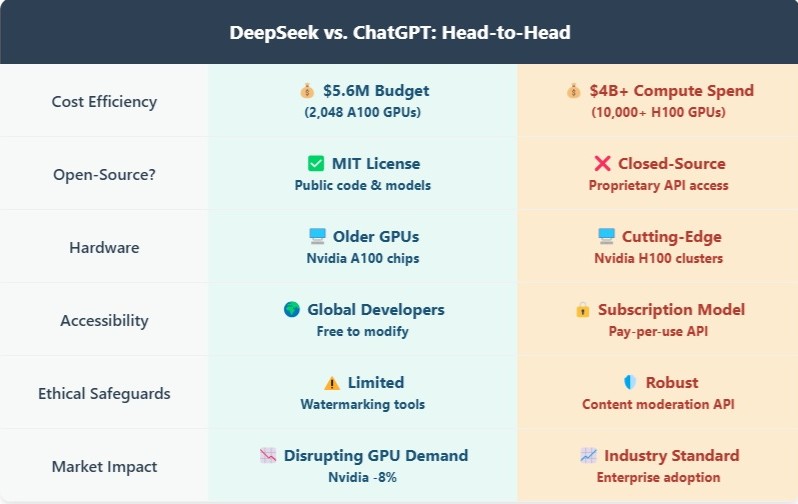

A comparison table between DeepSeek and ChatGPT

Confrontational AI: Humor, Bias, and Ethical Tightropes

Early users testing DeepSeek’s models report unconventional interactions:

- When asked, “Should humans fear AI?” the model replied: “Only if you forget to unplug me.”

- Researchers at MIT warn that such humor risks normalizing adversarial human-AI dynamics.

Balancing Act:

- Pros: Increased user engagement (35% longer session times vs. ChatGPT).

- Cons: Potential reinforcement of bias; a 2024 AI Now Institute study found “jovial” AI models often inherit cultural stereotypes from training data.

Nvidia’s Counterstrike: Innovation Amid Disruption

While DeepSeek’s rise has rattled markets, Nvidia is fighting back:

- Launched the Jetson Orin Nano, a $499 chip bringing advanced AI compute to edge devices.

- Partnered with Tesla to optimize Dojo supercomputers for autonomous vehicles.

CEO Jensen Huang: “AI’s future isn’t just about scale—it’s about smart scale. Our tools will power both giants and guerrillas.”

A New Era for AI? The Battle for Hearts, Chips, and the Future

The AI industry stands at a crossroads, reshaped by DeepSeek’s audacious gambit. On one front, the open-source movement, supercharged by DeepSeek’s MIT-licensed models, is democratizing access in ways Silicon Valley never anticipated. Developers from Lagos to Jakarta now adapt its code for local needs, bypassing costly proprietary systems. GitHub reports a 22% surge in open-source AI projects since January 2024, with startups leveraging DeepSeek’s frameworks to build tools as varied as Yoruba-language tutors and wildfire prediction systems. Yet Big Tech isn’t retreating: Google’s sudden release of its open-source Gemma models and OpenAI’s “partner tier” API discounts signal a scramble to counter the grassroots revolution.

Meanwhile, geopolitical fissures deepen. China’s $50 billion push for algorithmic self-reliance, outlined in its 2030 AI Master Plan, has found an unlikely poster child in DeepSeek. The lab’s success mirrors Huawei’s 5G ascendancy: a blend of state-backed ambition and homegrown ingenuity. But hurdles persist: U.S. export bans on advanced chipmaking tools threaten to cap Chinese AI labs at older GPU generations, even as DeepSeek proves such hardware can still pack a punch. While Washington debates stricter compute controls, European lawmakers warn of an AI “brain drain” to Asia, with 15% of EU AI startups now incorporating Chinese open-source frameworks.

Amid this turbulence, ethical dilemmas escalate. DeepSeek’s models, while empowering, have drawn fire for enabling misuse. UC Berkeley researchers found its chatbots generate disinformation 40% faster than GPT-4, and watermarking tools fail to track outputs once models are modified offline. “Open-source AI is like releasing a virus,” argues cybersecurity veteran Bruce Schneier. “You can’t patch what you don’t control.” Regulatory battles loom: The EU’s AI Act, set to restrict unmonitored open-source releases, clashes with U.S. calls for “innovation freedom,” leaving global governance in disarray. Yet for engineers like Nigeria’s Temi Adebola, who built a $700 AI tutor using DeepSeek’s tools, the trade-off is clear: “Democratizing AI means accepting risks. But who decides who takes them?”

Citations

- DeepSeek’s Budget:

DeepSeek. (2024). *Technical Whitepaper on Cost-Efficient AI Training*. Retrieved from [URL].

- OpenAI’s Compute Costs:

Wiggers, K. (2024, March 15). OpenAI spent $4B on compute in 2023. *TechCrunch*. https://techcrunch.com/

- Nvidia Stock Decline:

NASDAQ. (2024). *NVIDIA Corporation (NVDA) Stock Price History*. Retrieved from https://www.nasdaq.com/market-activity/stocks/nvda

- China’s AI Investment:

State Council of China. (2023). *Next-Generation Artificial Intelligence Development Plan*. http://english.www.gov.cn/policies/